2023.01.31

Political science as problem solving

I recently came across a manuscript titled “Methodologies for ‘Political Science as Problem Solving’” via Twitter:

I have a new "methodology big think" essay on the "problem solving" approach to social science. More here, along with a link to a PDF of the essay. I would love to hear what people think. https://t.co/vJI1Looaml

— Cyrus Samii (@cdsamii) January 21, 2023

The manuscript is very well written, and a joy to read. The goal of the paper is to explain what “Political Science as Problem Solving (PSPS)” is, and how it is done (including carefully curated references to the most relevant and recent methodological advances for this purpose). At its root PSPS is defined by its motivations:

The key is to motivate research in terms of questions like “what is the problem here?”, “why does the problem persist?”, and “how can we mitigate the problem?”. By “problem” I mean something that is normatively wrong in the world. This requires normative commitments.

This is not to say that Political Science is, or ought to be, only about problem solving. It is enough that some political scientists do see themselves, at least at times, as problem solvers (or plumbers). If you happen to be one of them, then this is the way to proceed (adapted from the manuscript):

| Stage | Goal | Inference | High Level Technique |
|---|---|---|---|
| Problem definition | Assess importance and persuade skeptics | Descriptive | Normative theory, operationalization, measurement, description, and persuasion |
| Problem diagnosis | Identify potential interventions | Causes of effects | Positive theory, encoding causal relations, causal identification, statistical inference |
| Problem remediation | Intervene to mitigate the problem | Effects of causes | Causal synthesis, intervention design, implementation, and analysis |

The manuscript has much more nuance about each technique. For example, the discussions of strategic incentives and their role in measurement, and of the need for better ways to translate formal theories into Directed Acyclic Graphs (DAGs), are great. The above table does not do justice to these gems. You should read the manuscript!

The focus of the manuscript is on PSPS methodology. There is frankly little to quibble with here. Instead, I will focus on some of my lingering doubts about this mode of political science research.

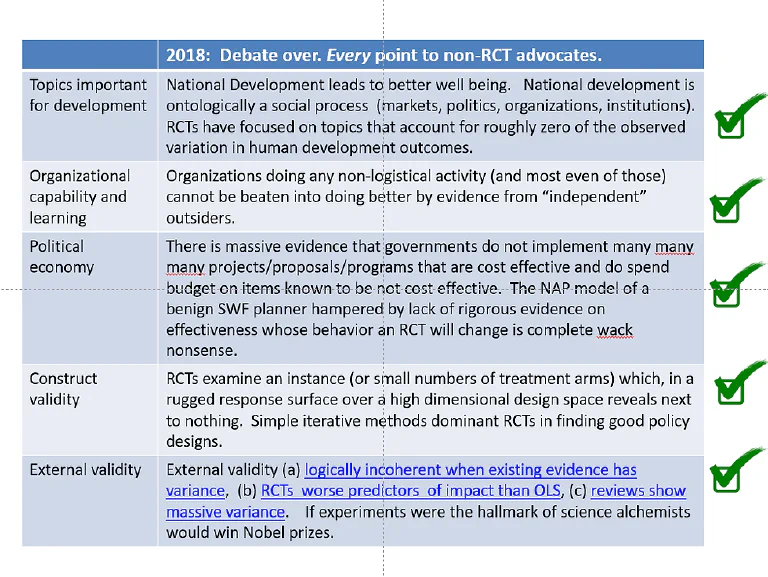

PSPS may be best suited to solving micro-problems, like teacher attendance, than macro-problems, like national development. Lant Pritchett summarizes the critique (with reference to PSPS’s close cousins, the randomistas) in the slide below (see .pptx).

De jure, the tools and techniques of PSPS could apply to macro-problems. De facto, not so much. For example, I could credibly say that my goal is to improve student outcomes in a school district in Haiti, or Afghanistan. That seems feasible, though one should never underestimate how hard even the simplest of problems can be. However, I am not sure I could claim with a straight face that my goal is to solve underdevelopment in Haiti, or political violence in Afghanistan. These problems are insoluble for a single researcher, even if individual efforts, like improving school outcomes, may have some positive impact at the margin.

I don’t think any of this is a criticism of PSPS so much as a warning for would-be practitioners: PSPS is not problem neutral. And that is OK. If other folks want to go after the big macro-problems, kudos to them.

Another gem in the manuscript is the motivation and (brief) discussion of persuasiveness. PSPS practitioners need to be persuasive, for two reasons. First,

This is meant as a reminder about the social nature of social science research. It also reflects the fact that problem-solving research programs operate as appeals for how attention and resources should be applied to try to improve the world. Typically such decisions require appeal to collective interests and overcoming skeptics.

Second,

political science is different from classical economics in that the latter focuses on Pareto or Kaldor-Hicks efficiency, which by definition does not require defending the value of interventions that ultimately benefit one group relative to others. By contrast, generic normative criteria that political scientists emphasize, like civic equality, may motivate interventions intended to improve the standing of some individuals relative to others. This difference is often the basis of defining something as “political.”

In other words, persuasiveness is required to mobilize not just resources but also opinion. The latter is critical because the solution will often benefit some at the expense of others. Indeed, PSPS could be construed as a political act. Scientific activism is not new, but it does bring along some risks.

Engagé political scientists may no longer pretend they are dispassionate observers of Nature. More worryingly, some may be tempted to fabricate data for the good of the cause or, who knows, set up revolutionary tribunals. It would not be the first or last time that noble ends lead to ignoble means.

Arguably, the reason many problems persist is not lack of proven solutions so much as lack of political support to implement them. If so, politics is inescapable. Researchers trying to solve such problems ought, at least in principle, to be focusing on ways to change the political equilibrium. But this could run the gamut, from innocent get-out-the-vote efforts to sinister techniques of regime change, via voter manipulation. Maybe I am just a worrywart, but practitioners of PSPS would be well advised to develop a clear set of principles and a code of conduct.

Putting such thorny issues aside, I do agree persuasiveness is critical for any would-be PSPS researcher. Yet persuasiveness is ignored in most PhD programs (with the possible exception of Public Health programs, where activism is par for the course). My sense is that persuasion, like management, is seen as a “practical” skill not worthy of a serious research program, even if it may hold the key to getting any research off the ground, let alone solving problems.

If we agree that many problems fester for lack of buy-in from decision makers and stakeholders, not lack of proven solutions, then we need much more rigorous research on how to get buy-in. In industry, folks swear by Dale Carnegie or Cialdini (I have mandatory HR training to attest to it), though I have not seen the empirical evidence either way.

More recently, I’ve started to question the need to test many of our interventions via randomized controlled trials (as seems implicit in the problem remediation stage of the PSPS framework). In this I have been influenced by the notion that the value of information comes from reducing the risk of decisions, not from proving what works. (In particular, by reading Douglas Hubbard who, as a practical-minded person, ought to be required reading for any PSPS practitioner.)

For example, suppose we are back at the start of the COVID-19 pandemic. Suppose also there are two research teams tasked with tackling the pandemic. The strategy of the first team, the PSPS team, is to explore the space of possible interventions to find what works. They begin by quickly assessing the nature and seriousness of the threat. Next, they review the literature for possible solutions. Finally, they pick good candidates for testing. Then they wait. Weeks. Months. Only when the trial results arrive do they decide to scale up successful interventions.

The strategy of the second team, the FAST team, is to try anything and everything whilst minimizing the risk of a bad decision. For example, if the extant literature, expert consensus, and so on suggest that an initiative may work, and the risks are acceptably low, then the team scales it right away. They may include a retrospective control group to verify their risk and efficacy assessment ex post, and promptly remove anything that proves harmful. For the most part, however, they reserve prospective trials for initiatives, like vaccines, that have a huge upside but also a potentially huge downside if things go wrong.

My sense is the FAST team would have adopted mask mandates (with the possible exception of children), social distancing, better ventilation, massive rapid testing, and so on way faster than the PSPS team. Sure, they may have also recommended more wonky solutions of dubious effectiveness, like travel bans, but hopefully at little harm. My money, in terms of solving problems and lives saved, is therefore on the FAST team, though both teams would have been equally keen on testing mRNA vaccines, the 800-pound gorilla in terms of effectiveness.

Viewed in this light, maybe the role of the PSPS researcher is not just to determine what works, but also to make prompt and best use of the uncertain evidence. After all, much of our statistical apparatus is precisely about quantifying uncertainty, and informing decisions under uncertainty. If so, why wait for proof?
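The value-of-information argument can be made concrete with a toy calculation. The sketch below is not taken from the manuscript or from Hubbard; it assumes a deliberately simple world in which an intervention either works or does not, and every name (`evpi`, `should_trial_first`) and number in it is a hypothetical illustration. The idea is that a trial is only worth waiting for when the expected value of the information it yields exceeds the cost of the delay.

```python
def expected_value_scale_now(p_works, benefit, harm):
    """Expected payoff of scaling immediately under current uncertainty."""
    return p_works * benefit - (1 - p_works) * harm


def evpi(p_works, benefit, harm):
    """Expected value of perfect information (toy, two-outcome version).

    With perfect information we would scale only when the intervention works.
    Without it, we take the better of "scale now" and "do nothing" (payoff 0).
    """
    best_without_info = max(expected_value_scale_now(p_works, benefit, harm), 0.0)
    value_with_info = p_works * benefit
    return value_with_info - best_without_info


def should_trial_first(p_works, benefit, harm, delay_cost):
    """Wait for a trial only if the information is worth more than the delay."""
    return evpi(p_works, benefit, harm) > delay_cost


# Hypothetical numbers, in arbitrary units of welfare.
# Low-risk measure (think ventilation): likely to help, little downside.
low_risk = should_trial_first(p_works=0.7, benefit=100, harm=5, delay_cost=20)

# High-stakes measure (think a new vaccine): huge upside, huge downside.
high_stakes = should_trial_first(p_works=0.5, benefit=1000, harm=800, delay_cost=50)

print(low_risk, high_stakes)
```

Under these made-up numbers the low-risk measure is scaled right away, while the high-stakes one is trialled first, which mirrors the FAST team's rule of thumb. A real analysis would need calibrated probabilities and imperfect, costly information rather than a perfect oracle.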

The FAST approach requires training on decision theory, the value of information, and decisiveness under uncertainty (which, judging by the reactions to the pandemic, appears to be the rarest of characteristics across all levels of government). Of course, such training may be more likely to produce engagé scientists, which may or may not be a good thing. After all, problem solving demands action, so action seems inescapable. One possible escape clause here is to focus your research on tools and techniques for problem solving, as opposed to solving problems directly.

Finally, one aspect that I found missing from the otherwise excellent methodological overview was the role of Artificial Intelligence in the work of PSPS researchers. Large language models may, in time, prove useful to synthesize an increasingly vast literature, and encode it in a DAG. Such computer-aided literature reviews will likely become a core part of the PSPS process.

Today, one way social scientists manage the literature is by focusing ever more narrowly on their field. The excuse is that they are writing for their field audience. Aided by AI, I see no reason for any such limitations. Rather, the incentive will be to go big early by summarizing all extant knowledge. Knowing how to work alongside an AI will likely be a key skill.

For example, back when I was in a startup, one of our prospective clients, a health company, was very interested in reducing 30-day patient readmission. Today, you could start by asking ChatGPT “What are the causes of 30-day patient readmission?” or even “What could I do to reduce 30-day patient readmission?”. I don’t think the answers would be novel to any expert in the field, and a novice may be fooled by some AI hallucination. Still, the performance is pretty impressive as a first cut, especially considering the AI was not trained for this specific purpose.

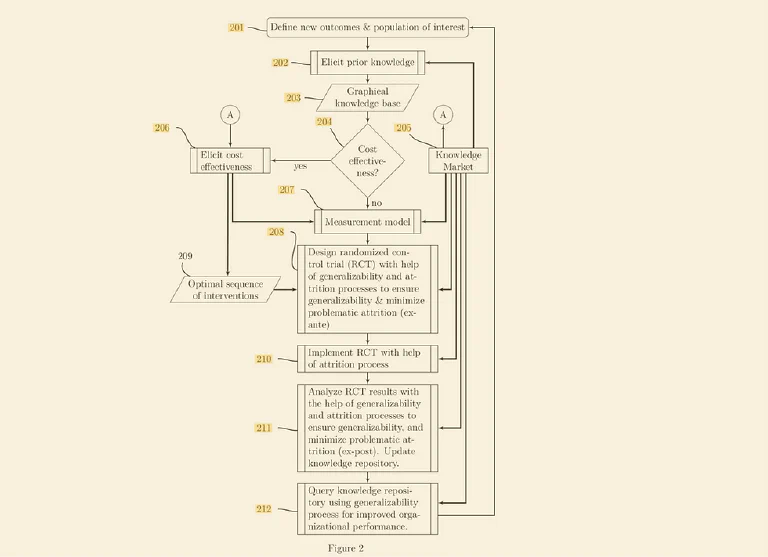

Source: https://patents.google.com/patent/US20160292248A1/en


Moreover, if the AI can do that, then presumably it can also summarize the causal knowledge implicit in a vast literature in the form of a directed acyclic graph. As I wrote in a patent application a while back:

As a simplified illustration a simple text mining engine provided by a third party via the Knowledge Market may perform processes intended to classify a database of texts into those about 30 day patient readmission, and those not about readmission. Using the former, it can implement processes to analyze all sentences in each document, using verbs related to causality to try to parse out sentences about cause and effect from non-causal sentences. Finally, it can analyze the grammatical structure of the former to parse the cause from the effect, and commit its findings to a database, including source document, sentence, tagged structure, and data interpretable by a Knowledge Discovery Graph for display. For example, the phrase “Poor hand-washing increases 30 day readmission rates” includes a verb (increase) often associated with causal language, a cause (hand-washing), and an effect (30 day readmission). Once appropriately parsed, these data can be displayed in a knowledge graph as (hand washing)→(30 day readmission) along with supported documentation accessible by clicking on graph nodes or edges.
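The parsing step described in the passage can be sketched in a few lines. This is a minimal illustration, not the engine from the patent application: it assumes a small hand-picked list of causal verbs and a naive "cause, verb, effect" sentence shape, and the names `CAUSAL_VERBS` and `extract_causal_edge` are hypothetical. Real text would need proper grammatical parsing.

```python
import re

# Hypothetical, deliberately short list of verbs associated with causal language.
CAUSAL_VERBS = ("increases", "reduces", "causes", "prevents", "lowers")

# Naive pattern: <cause> <causal verb> <effect>, with an optional final period.
_PATTERN = re.compile(
    r"^(?P<cause>.+?)\s+(?P<verb>" + "|".join(CAUSAL_VERBS) + r")\s+(?P<effect>.+?)\.?$",
    re.IGNORECASE,
)


def extract_causal_edge(sentence):
    """Return (cause, verb, effect) for a causal sentence, else None."""
    match = _PATTERN.match(sentence.strip())
    if match is None:
        return None
    return (match["cause"], match["verb"].lower(), match["effect"])


sentences = [
    "Poor hand-washing increases 30 day readmission rates",
    "Readmission was measured at 30 days.",  # no causal verb, so it is skipped
]

# Keep only sentences that parse as cause and effect.
edges = [edge for edge in map(extract_causal_edge, sentences) if edge is not None]

for cause, verb, effect in edges:
    print(f"({cause}) -> ({effect})  [{verb}]")
```

Each extracted triple corresponds to one edge in the knowledge graph, and keeping the source sentence alongside it would provide the supporting documentation the passage mentions.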

Because a DAG is a mathematical object, computers can reason over it, which opens up further possibilities. Pair the DAG with data and now you have an oracle. In fact, it may be a better oracle than one trained on data alone. As Amit Sharma shared in a Twitter thread:

#ChatGPT obtains SoTA accuracy on the Tuebingen causal discovery benchmark, spanning cause-effect pairs across physics, biology, engineering and geology. Zero-shot, no training involved.

I'm beyond spooked. Can LLMs infer causality? 🧵 w/ @ChenhaoTan pic.twitter.com/qnRAU3nEf3

— Amit Sharma (@amt_shrma) December 20, 2022

This is pretty impressive for a general-purpose AI never trained to do this specific task!
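To illustrate what "reasoning over a DAG" can mean in practice, here is a small sketch using only the Python standard library (`graphlib`, available from Python 3.9). Apart from the hand-washing edge, the graph is made up for illustration: the "infection" and "discharge planning" nodes, and the helper names `is_acyclic` and `all_causes`, are assumptions rather than anything from the manuscript or the patent application.

```python
from graphlib import CycleError, TopologicalSorter

# Each node maps to the set of its direct causes (its parents in the DAG).
# Hypothetical graph for illustration only.
PARENTS = {
    "30 day readmission": {"infection", "discharge planning"},
    "infection": {"hand washing"},
}


def is_acyclic(parents):
    """Check that the causal graph really is a DAG."""
    try:
        # static_order() raises CycleError if the graph contains a cycle.
        list(TopologicalSorter(parents).static_order())
        return True
    except CycleError:
        return False


def all_causes(parents, node):
    """Collect every direct and indirect cause (ancestor) of a node."""
    seen = set()
    stack = list(parents.get(node, ()))
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(parents.get(current, ()))
    return seen


print(is_acyclic(PARENTS))
print(sorted(all_causes(PARENTS, "30 day readmission")))
```

Questions such as "what are all the upstream causes of readmission?" then become graph queries. Pairing the graph with data, for example to decide which variables to adjust for, would require a dedicated causal inference library.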

In conclusion, the manuscript is an invaluable resource for would-be PSPS practitioners. However, I am unsure where to draw the line separating the work of a PSPS researcher from that of a (methods-trained) activist. And, for better or worse, my sense is that hiring faculty will struggle to make this distinction too. Personally, I have much sympathy for PSPS. But I also have some doubts. Caveat emptor.
